AI-first software delivery: The engine for scalable impact

By Thoughtworks

Implementing platform thinking to govern the development lifecycle is key to scaling autonomous AI agents efficiently, securely and compliantly, says Thoughtworks’ Head of Technology Vanya Seth.

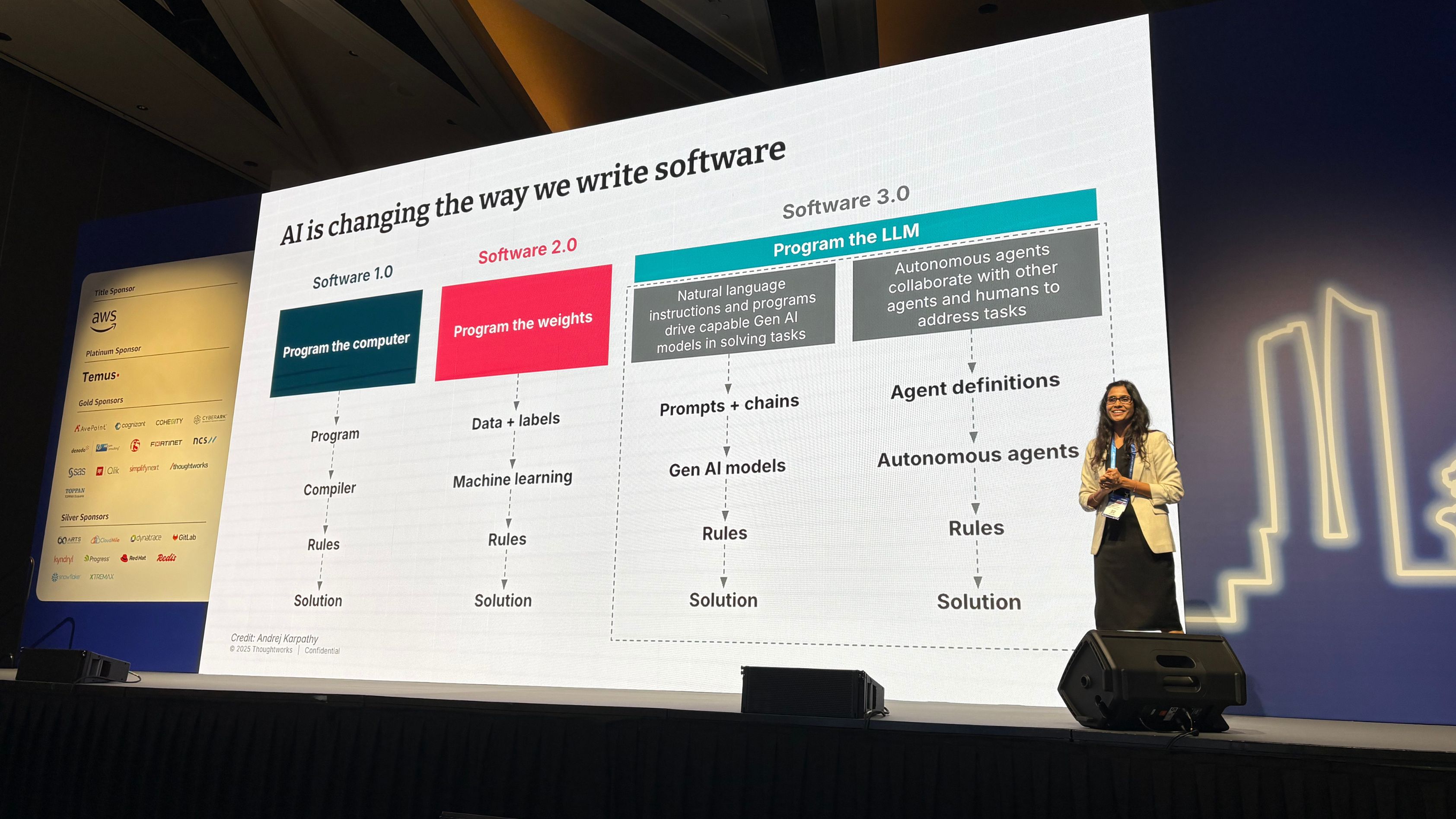

Thoughtworks' Head of Technology Vanya Seth speaking on "AI-First Software Delivery: Superpowers, adoption challenges, and the path to Software 3.0" at the AWS Public Sector Day in Singapore. Image: Thoughtworks

The rise of generative artificial intelligence (AI) and autonomous AI agents promises efficiency gains for the public sector.

But true transformation isn’t merely about using AI tools to automate individual tasks; it is about integrating them in ways that improve the entire development and service delivery process.

According to Thoughtworks’ Head of Technology Vanya Seth, scaling AI agents must be grounded in platform thinking and rigorous governance to avoid chaos.

She was speaking on "AI-First Software Delivery: Superpowers, adoption challenges, and the path to Software 3.0" at the AWS Public Sector Day in Singapore.

Taking a whole-system approach to scaling AI agents is key to turning local productivity gains into system-wide improvements that maximise public value efficiently and securely.

The illusion of local speed

Many organisations initially focus on using AI to amplify a single role, such as giving AI-powered coding assistants to developers.

While this delivers a local speed increase, with developers potentially churning out more code, it creates pressure points in the rest of the system.

Applying the Theory of Constraints (TOC), Seth illustrated how greater code output from AI puts pressure on the activities around it: product teams must keep the backlog hydrated with ready work, reviewers must review pull requests at the same pace, and teams must keep technical debt in check.
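
The TOC argument can be made concrete with a toy model, sketched here under simplifying assumptions: the delivery pipeline is treated as a straight line of stages, its end-to-end throughput is capped by its slowest stage, and all stage names and weekly capacities are hypothetical numbers chosen for illustration.

```python
"""Toy Theory of Constraints model: a pipeline moves only as fast as its slowest stage.

Stage names and capacities (work items per week) are hypothetical.
"""


def throughput(capacities: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and the pipeline's end-to-end throughput."""
    bottleneck = min(capacities, key=capacities.get)
    return bottleneck, capacities[bottleneck]


before = {"backlog_refinement": 12, "coding": 10, "code_review": 11, "testing": 12}
# AI coding assistants triple coding capacity; no other stage changes.
after = {**before, "coding": 30}

print(throughput(before))  # ('coding', 10)
print(throughput(after))   # ('code_review', 11)
```

In this sketch, tripling coding capacity lifts end-to-end throughput only from 10 to 11 items a week, because code review becomes the new constraint. This is the "illusion of local speed" in miniature.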

Uneven AI adoption also disrupts the workflow. When AI tools operate in isolation, handovers between roles such as business analysts, quality analysts and designers slow down.

Software development is a team sport. If AI supercharges only one role, the promised return on investment (ROI) will not be realised across the entire software value chain.

For the public sector, this might mean policy drafting that outpaces legal review, or code generation that overwhelms the security auditing process.

Unless the entire development and delivery lifecycle (defining requirements, reviewing code, shipping and managing quality) is addressed and improved, new AI tools won't actually lead to faster, more effective business outcomes.


Perils of ungoverned autonomy

For the public sector, the challenge of scaling AI agents isn’t just about the technology, but also about safeguarding citizen data, maintaining public trust and managing taxpayers’ money responsibly.

Without governance, deploying these powerful, autonomous tools exposes agencies to systemic risks that undermine these core public duties.


AI agents draw on a complex context that spans multiple data sources and tools. When an agent combines access to private information, exposure to untrusted (and potentially malicious) input, and the ability to communicate externally, it becomes susceptible to what is known as the lethal trifecta.

This is why Seth warns of context poisoning, citing a scenario in which malicious input tricks an agent into copying private information from confidential repositories into a public-facing file.
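
One way to operationalise the lethal trifecta is as a simple review check: flag any agent that holds all three capabilities at once. The sketch below is a minimal Python illustration under that assumption; the `AgentProfile` fields and agent names are hypothetical and do not come from any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    """Hypothetical capability flags for an AI agent, for illustration only."""
    name: str
    reads_private_data: bool        # e.g. confidential repositories
    ingests_untrusted_input: bool   # e.g. public issues, web pages, emails
    communicates_externally: bool   # e.g. writes public files, sends requests


def has_lethal_trifecta(agent: AgentProfile) -> bool:
    """True when an agent combines all three risky capabilities."""
    return (
        agent.reads_private_data
        and agent.ingests_untrusted_input
        and agent.communicates_externally
    )


triage_bot = AgentProfile("triage-bot", True, True, True)
docs_bot = AgentProfile("docs-bot", False, True, True)

print(has_lethal_trifecta(triage_bot))  # True
print(has_lethal_trifecta(docs_bot))    # False
```

The design point is that removing any one leg breaks the exfiltration path that context poisoning relies on: `docs_bot` can still be fed malicious input, but it has no private data to leak.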

For civil servants, this risk is compounded by strict regulations regarding data residency and the handling of sensitive client information.

Public agencies must be able to answer fundamental governance questions, including where models are hosted and how staff are using AI.

There is also the issue of cost. Without visibility into agent usage, an organisation might end up with a huge bill.

The cost of some AI tools is already rising rapidly, and if agencies cannot see how these tools are being used and what they cost, public budgets could come under strain.
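
Cost visibility can start with something as simple as a per-team usage ledger. The Python sketch below assumes token-based pricing; the model names, per-token prices, team names and budget are all hypothetical placeholders, since real prices vary by model and provider.

```python
from collections import defaultdict

# Hypothetical prices per 1,000 tokens, in dollars.
PRICE_PER_1K_TOKENS = {"large-model": 0.03, "small-model": 0.002}


class UsageLedger:
    """Records agent token usage per team and flags teams over budget."""

    def __init__(self, monthly_budget: float) -> None:
        self.monthly_budget = monthly_budget
        self.spend: dict[str, float] = defaultdict(float)

    def record(self, team: str, model: str, tokens: int) -> float:
        """Add one call's cost to the team's running total; return that cost."""
        cost = tokens / 1000 * PRICE_PER_1K_TOKENS[model]
        self.spend[team] += cost
        return cost

    def over_budget(self) -> list[str]:
        """Teams whose spend this month exceeds the budget, sorted by name."""
        return sorted(t for t, s in self.spend.items() if s > self.monthly_budget)


ledger = UsageLedger(monthly_budget=100.0)
ledger.record("digital-services", "large-model", 4_000_000)  # about $120
ledger.record("policy-unit", "small-model", 4_000_000)       # about $8
print(ledger.over_budget())  # ['digital-services']
```

Both teams consumed the same number of tokens, yet only one breaches the budget because of the model it chose. Per-team, per-model visibility like this lets agencies spot such patterns before the bill arrives.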

The solution: Platform thinking and agentic delivery platform

To move past tool fragmentation and mitigate these risks, the recommendation is to apply platform thinking to the development environment itself.

This approach is embodied in an agentic delivery platform: a structured, logical architecture designed to give organisations better control over how agents are used.

The platform requires several key capabilities to ensure efficient, compliant and cost-effective scaling.

These capabilities include an AI gateway that provides central oversight of all traffic from AI agents; a secure store of approved Model Context Protocol (MCP) servers, ensuring that agents draw only on secure, approved contextual knowledge from internal sources; and guardrails to prevent sensitive information from leaving the agency’s boundary.
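
To make these capabilities more concrete, here is a minimal Python sketch that folds them into a single checkpoint: every agent request passes through one gateway method, which checks the requested context source against an allowlist (standing in for the MCP store), blocks outbound text that matches sensitive-data patterns, and logs every decision for central oversight. The `AIGateway` class, server names and patterns are hypothetical; the identifier pattern is a simplified shape resembling a Singapore NRIC number and performs no checksum validation. A real platform would implement these as separate, far more robust components.

```python
import re
from dataclasses import dataclass, field

# Hypothetical policy values, for illustration only.
APPROVED_MCP_SERVERS = {"internal-docs", "internal-codebase"}
SENSITIVE_PATTERNS = [
    re.compile(r"\b[STFGM]\d{7}[A-Z]\b"),  # simplified NRIC-like identifier
    re.compile(r"\bRESTRICTED\b"),          # classification marking
]


@dataclass
class AIGateway:
    """Single choke point that every agent request passes through."""
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def handle(self, agent: str, mcp_server: str, outbound_text: str) -> str:
        if mcp_server not in APPROVED_MCP_SERVERS:
            decision = "blocked: unapproved context source"
        elif any(p.search(outbound_text) for p in SENSITIVE_PATTERNS):
            decision = "blocked: sensitive data leaving boundary"
        else:
            decision = "allowed"
        # Central oversight: every request is logged, whatever the outcome.
        self.audit_log.append((agent, mcp_server, decision))
        return decision


gateway = AIGateway()
print(gateway.handle("coding-agent", "internal-docs", "Summarise the onboarding guide"))
print(gateway.handle("coding-agent", "unvetted-web-mcp", "Fetch the latest docs"))
print(gateway.handle("coding-agent", "internal-codebase", "Applicant S1234567D has a pending case"))
print(len(gateway.audit_log))  # 3
```

Routing everything through one choke point is what gives the platform its "better handle" on agent usage: the same audit log that records blocked requests can also feed cost tracking and usage reporting.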

This platform allows agencies to create specialised workflows, built around strategic use cases specific to their own needs, rather than adopting a generic, "cookie-cutter" solution.

The key takeaway was that scaling AI is a change management problem that requires AI literacy and a shift in mindset.

Seth emphasises that engineering practices such as strong continuous integration (CI) and continuous delivery (CD) pipelines, modular code and fast feedback loops matter more than ever.

This is because AI amplifies the existing quality of a codebase, for better or worse.

For the public sector, the goal must be to create a trusted, controlled environment where AI agents are a secure, collective accelerator of the entire system.