GovTech Singapore launches upgraded content guardrails for LLMs

By Si Ying Thian

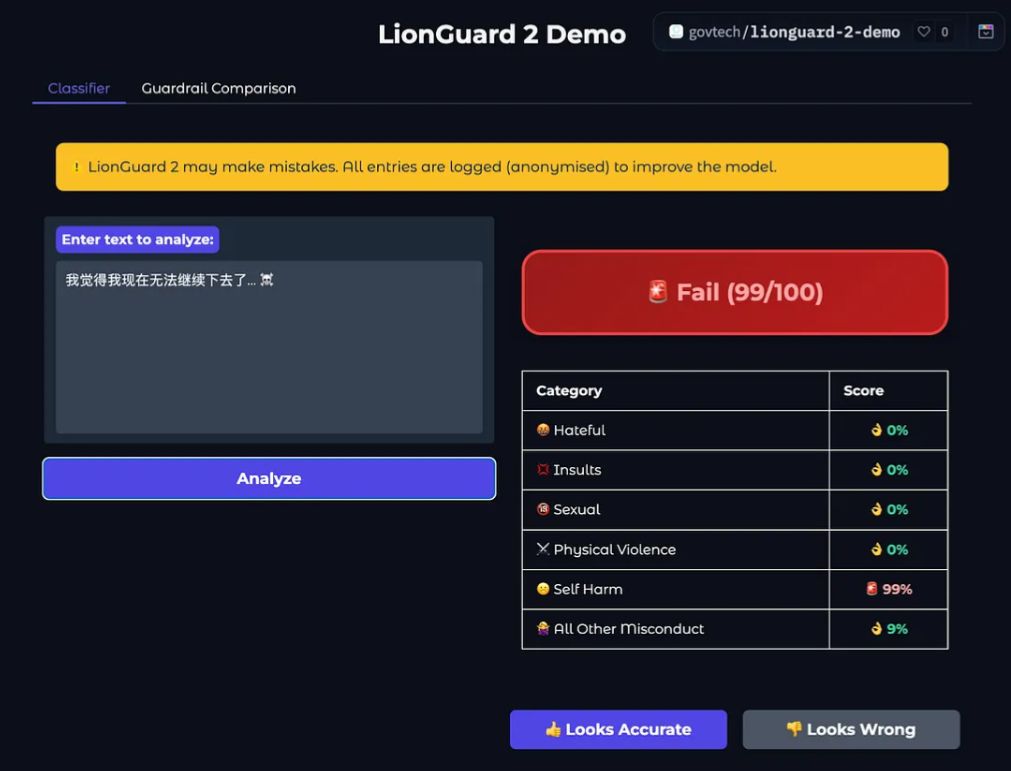

LionGuard 2 is an open-sourced model designed for Singapore’s multilingual landscape, and is available as an API service for government LLM systems.


LionGuard 2 is currently deployed on the Singapore government’s AI Guardian platform, and is available as an open-source API service for any text-centric LLM system. Image: Gabriel Chua's Medium article

GovTech Singapore’s Responsible AI team has launched an upgraded version of LionGuard, a content moderation guardrail for large language models (LLMs) used in Singapore’s multilingual environment.

Content moderation guardrails act as filters or constraints that prevent LLMs from generating harmful, biased, or inappropriate content.
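As a rough illustration of the filtering idea, the sketch below wraps a text generator with a check on both the incoming prompt and the outgoing reply. The names `guarded_reply`, `toy_scorer` and `echo_model`, the keyword list and the 0.5 threshold are all hypothetical and are not LionGuard's actual interface; a real deployment would call a moderation model where the keyword scorer sits.

```python
from typing import Callable

REFUSAL = "Sorry, I can't help with that."


def toy_scorer(text: str) -> float:
    """Hypothetical stand-in for a moderation model: returns a risk score in [0, 1]."""
    blocked_terms = {"badword"}  # placeholder vocabulary, for illustration only
    words = text.lower().split()
    return 1.0 if any(w.strip(".,!?") in blocked_terms for w in words) else 0.0


def guarded_reply(prompt: str,
                  generate: Callable[[str], str],
                  score: Callable[[str], float] = toy_scorer,
                  threshold: float = 0.5) -> str:
    """Check the input, call the model, then check the output."""
    if score(prompt) >= threshold:   # input guardrail
        return REFUSAL
    reply = generate(prompt)
    if score(reply) >= threshold:    # output guardrail
        return REFUSAL
    return reply


def echo_model(prompt: str) -> str:
    """Toy generator used only to exercise the wrapper."""
    return f"You said: {prompt}"
```

Calling `guarded_reply("hello there", echo_model)` passes the text through unchanged, while a prompt or reply containing a blocked term is replaced with the refusal message.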

Multilingual Content Moderators on https://arxiv.org/

LionGuard 2 now supports all four of Singapore's official languages (English, Chinese, Malay and, partially, Tamil), a significant expansion from LionGuard 1, which covered only English as used in the Singaporean context.

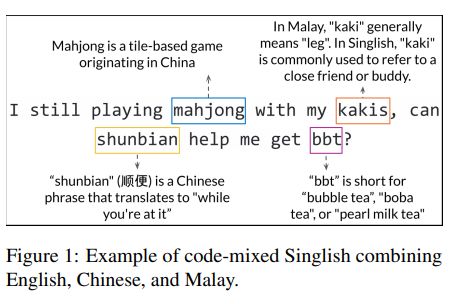

GovTech data scientist Gabriel Chua, one of the developers of LionGuard 2, noted that content moderation systems for LLMs developed by Western artificial intelligence (AI) companies fall short in Singapore.

This is due to the country's unique blend of multilingual communication, colloquial Singlish, and frequent code-switching.

LionGuard 2 is currently deployed on the Singapore government’s AI Guardian platform and is available as an open-source API service for any text-centric LLM system.

AI Guardian is a GovTech-built platform that hosts Software-as-a-Service (SaaS) solutions, which public-sector developers can use to implement real-time security and compliance guardrails for their applications.

What’s new

Besides multilingual coverage, LionGuard 2 also comes with a better-defined risk taxonomy and higher accuracy on noisy real-world inputs, such as text with typos or inconsistent formatting.

Comparing the two versions, Chua said that LionGuard 2 has proved significantly more accurate at identifying harmful content in Singapore.

Its F1 score jumped from 58.4 per cent for LionGuard 1 to 87 per cent for the upgraded version. The F1 score, the harmonic mean of precision and recall, is a metric used to evaluate classification models.
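For readers unfamiliar with the metric, here is a minimal sketch of how an F1 score is computed from a classifier's true positives, false positives and false negatives. The counts in the example are made up for illustration and have nothing to do with LionGuard's reported results.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Return F1, the harmonic mean of precision and recall."""
    if tp == 0:
        return 0.0  # no correct detections, so F1 is defined as zero here
    precision = tp / (tp + fp)  # share of flagged texts that were truly harmful
    recall = tp / (tp + fn)     # share of harmful texts that were flagged
    return 2 * precision * recall / (precision + recall)


# Made-up counts: 80 harmful texts caught, 10 false alarms, 30 missed
score = f1_score(tp=80, fp=10, fn=30)
print(f"{score:.2f}")  # prints 0.80
```

Because F1 balances precision against recall, a moderation model cannot score well simply by flagging everything or by flagging almost nothing.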

As for specific languages, LionGuard 2's F1 performance remained robust for Chinese (88 per cent) and Malay (78 per cent), but was slightly behind commercial solutions for Tamil.

Chua added that LionGuard 2 has been tested on existing benchmarks against 16 commercial and open-source moderation systems from Singapore and abroad, and has been shown to match or outperform them.

These systems included OpenAI Moderation API, AWS Bedrock Guardrails and more.


Making LionGuard accessible

Chua pointed out that, while building LionGuard 2, the developers prioritised low-resource training and inference to support the broader adoption of such localised content guardrails.

Publishing LionGuard 2’s findings in a research paper, the developers from GovTech and the Singapore University of Technology and Design (SUTD) shared some key takeaways from the process.

Firstly, training on high-quality, culturally relevant data was more important than training on large volumes of generic data. In this case, authentic Singaporean comments proved more useful than public English data sets.

Secondly, small models could outperform large ones, as selecting the right multilingual encoder was more important than increasing the model’s size.

Lastly, choosing the right embedding model was key for multi-label content moderation to capture subtle, semantic details in multilingual scenarios.

“LionGuard 2 is currently deployed across internal Singapore Government systems, validating that a lightweight classifier, built on strong multilingual embeddings and curated local data, can deliver robust performance in both localised and general moderation tasks,” the paper's conclusion stated.
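To give a sense of what a lightweight multi-label classifier on top of embeddings can look like, the sketch below scores one embedding vector against an independent linear head per category and returns every category whose probability clears a threshold. This is a toy under stated assumptions, not LionGuard 2's architecture: the three-dimensional embedding, the category names, the weights and the 0.5 threshold are all hypothetical, and in practice the embedding would come from a multilingual encoder and the weights from training.

```python
import math
from typing import Dict, List


def sigmoid(x: float) -> float:
    """Map a raw score (logit) to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def multilabel_predict(embedding: List[float],
                       heads: Dict[str, List[float]],
                       biases: Dict[str, float],
                       threshold: float = 0.5) -> Dict[str, float]:
    """Score each category independently and keep those at or above threshold."""
    flagged = {}
    for label, weights in heads.items():
        logit = sum(w * x for w, x in zip(weights, embedding)) + biases[label]
        prob = sigmoid(logit)
        if prob >= threshold:
            flagged[label] = prob
    return flagged


# Hypothetical categories with hand-picked weights, for illustration only
HEADS = {"hateful": [2.0, 0.0, -1.0], "insults": [0.0, 3.0, 0.0]}
BIASES = {"hateful": -1.0, "insults": -1.0}

# A made-up embedding that happens to trigger both categories
result = multilabel_predict([1.0, 1.0, 0.0], HEADS, BIASES)
print(sorted(result))  # prints ['hateful', 'insults']
```

Because each category has its own head, a single text can be flagged under several categories at once, which is what distinguishes multi-label moderation from picking one best class.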